I’m currently working on a quadcopter UAV project. The onboard electronics include a 3-axis gyro, GPS/INS, AHRS, a 5.8 GHz FPV transmitter, a GoPro Hero camera and a small Gumstix computer.

After I finish assembling and tuning all the parts, I’ll test autonomous flight outdoors and upload the HD videos recorded by the GoPro Hero camera. Future experiments include vision-based auto-landing on moving vehicles, large-scale 3D terrain generation using SfM (structure from motion) and vision-based tracking of a specific ground vehicle. So please check my blog for the exciting videos to come!

[Updated 5/12/12]

Added the compass/IMU/GPS, central controller, 5.8 GHz FPV system and AHRS. Ready to tune the controller next Wednesday.

Thanks to Nate at Hobby Hut for helping me tune the quadcopter. If you live near the tri-state area and have problems with RC gear, go to Hobby Hut in Eagleville, PA and ask for Nate. He’s very helpful.

[Updated 11/15/12]

Attended a meeting at Villanova University with members of the local AUVSI chapter. It was a great chance to meet and talk with local people involved in UAV activities.

Thanks to Mr. Carl Bianchini for his presentation and to Mr. Steven Matthews for the opportunity. I will be giving a presentation on Jan. 17 or 24 at 7 pm in the CEER 210 Conference Room, Villanova University.

[Updated 6/1/13]

How are you guys doing? I’ve been busy preparing for my PhD prelim this semester and working on the DARPA Robotics Challenge, so I haven’t had much time to test the quadcopter. Today we successfully tested RTL (return to launch):

I will add more videos and pictures later.

[Updated 6/4/13]

Took a flight over the UD campus:

Rectification test at the UD football field:

Raw image:

Rectified:
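Rectification here means removing the lens distortion of the raw frame. As a minimal sketch, assuming the radial-tangential (Brown-Conrady) model that OpenCV’s `calibrateCamera` uses, with coefficients in OpenCV’s order (k1, k2, p1, p2, k3), the forward distortion and its iterative inverse on normalized image coordinates look like this. The coefficient values are hypothetical, not the GoPro’s actual calibration, and a very wide GoPro lens may be fitted better by OpenCV’s separate `cv2.fisheye` model.

```python
from typing import Tuple

# (k1, k2, p1, p2, k3) in OpenCV's order; values used below are hypothetical.
Coeffs = Tuple[float, float, float, float, float]


def pixel_to_normalized(u: float, v: float, fx: float, fy: float,
                        cx: float, cy: float) -> Tuple[float, float]:
    """Convert a pixel to normalized image coordinates using the intrinsics."""
    return (u - cx) / fx, (v - cy) / fy


def distort(x: float, y: float, c: Coeffs) -> Tuple[float, float]:
    """Apply radial + tangential distortion to a normalized point (x, y)."""
    k1, k2, p1, p2, k3 = c
    r2 = x * x + y * y
    radial = 1 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    xd = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
    yd = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
    return xd, yd


def undistort(xd: float, yd: float, c: Coeffs, iters: int = 20) -> Tuple[float, float]:
    """Invert distort() by fixed-point iteration (the model has no closed-form inverse)."""
    k1, k2, p1, p2, k3 = c
    x, y = xd, yd  # initial guess: the distorted point itself
    for _ in range(iters):
        r2 = x * x + y * y
        radial = 1 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
        dx = 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
        dy = p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
        x = (xd - dx) / radial
        y = (yd - dy) / radial
    return x, y


if __name__ == "__main__":
    coeffs = (-0.3, 0.1, 0.001, -0.0005, 0.0)  # hypothetical barrel distortion
    xd, yd = distort(0.3, -0.2, coeffs)
    print((xd, yd), undistort(xd, yd, coeffs))  # round trip recovers (0.3, -0.2)
```

To rectify a whole frame, the forward model is evaluated for every output pixel and the raw frame is sampled at the distorted location, which is what OpenCV’s `initUndistortRectifyMap` plus `remap` do.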

I’ll probably try to mount a second GoPro and do large-scale terrain reconstruction based on stereo matching and visual odometry. This method is simple and works well, and from the resulting point cloud files we can then generate a 3D model of UD.
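For a rectified stereo pair, depth follows from disparity by similar triangles, Z = f·B/d, where f is the focal length in pixels, B the baseline between the two cameras and d the disparity in pixels. Each pixel can then be back-projected to a 3D point, which is how a disparity map becomes a point cloud. This is a minimal sketch, assuming an ideal rectified pinhole pair with principal point (cx, cy); the function names and numbers are hypothetical, not taken from a real GoPro rig.

```python
from typing import List, Tuple

Point3 = Tuple[float, float, float]


def disparity_to_depth(d: float, f: float, baseline: float) -> float:
    """Depth along the optical axis for a rectified pair: Z = f * B / d."""
    if d <= 0:
        raise ValueError("disparity must be positive")
    return f * baseline / d


def reproject(u: float, v: float, d: float, f: float,
              cx: float, cy: float, baseline: float) -> Point3:
    """Back-project pixel (u, v) with disparity d to (X, Y, Z) in the left camera frame."""
    z = disparity_to_depth(d, f, baseline)
    return ((u - cx) * z / f, (v - cy) * z / f, z)


def disparity_map_to_cloud(disp: List[List[float]], f: float, cx: float,
                           cy: float, baseline: float) -> List[Point3]:
    """Turn a dense disparity map into a point cloud, skipping invalid (<= 0) pixels."""
    cloud = []
    for v, row in enumerate(disp):
        for u, d in enumerate(row):
            if d > 0:
                cloud.append(reproject(u, v, d, f, cx, cy, baseline))
    return cloud
```

In practice the disparity map itself would come from a dense matcher such as OpenCV’s `StereoSGBM`, and `cv2.reprojectImageTo3D` performs the same back-projection given the Q matrix from `stereoRectify`.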


[Updated 6/5/13]

Took a flight over the Chesapeake Bay:

[Updated 6/8/13]

Tested at Winterthur:

The UAV is being tested for scientific research purposes. The maximum altitude on the AHRS is set to 350 ft to stay within the FAA/AMA 400 ft limit.

[Updated 6/25/13]

Yesterday I went to the DARPA Robotics Challenge boot camp orientation at Drexel University (I’ll be working on the DRC at Drexel throughout this summer). I did a 360-degree spin at 400 ft over the Drexel campus and generated a fan panorama directly from the video input:

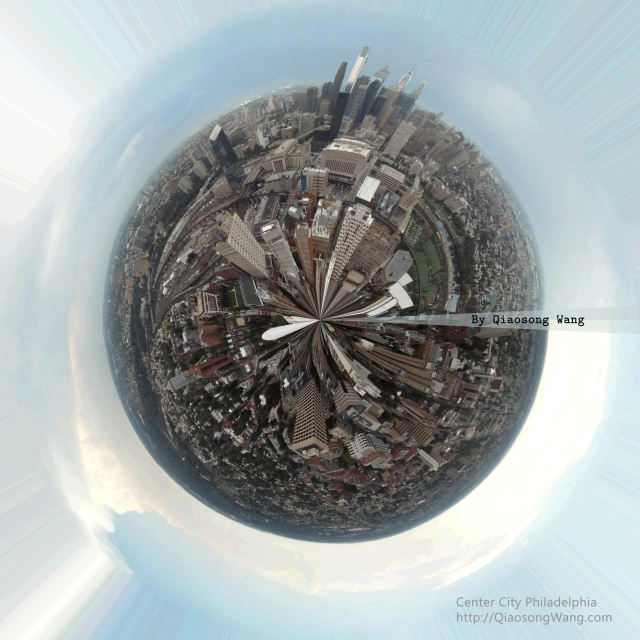

I also turned this into a polar panorama:
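A polar ("little planet") panorama can be produced from a fan panorama by inverse mapping: for each output pixel, its angle around the image center selects a panorama column and its distance from the center selects a panorama row. This sketch assumes the panorama spans a full 360 degrees horizontally and places the panorama’s bottom row at the center of the disc. It uses nearest-neighbour sampling on a plain list-of-rows image for brevity, and it is not necessarily how the image above was produced.

```python
import math
from typing import List, Optional, Tuple

Image = List[List[int]]  # rows of grayscale pixel values


def polar_to_pano(u: float, v: float, out_size: int,
                  pano_w: int, pano_h: int) -> Optional[Tuple[float, float]]:
    """Map output pixel (u, v) to panorama coordinates (x, y), or None outside the disc."""
    c = (out_size - 1) / 2.0  # center of the square output image
    radius = c                # disc radius in output pixels
    dx, dy = u - c, v - c
    r = math.hypot(dx, dy)
    if r > radius:
        return None
    theta = math.atan2(dy, dx)                                  # angle in [-pi, pi]
    x = ((theta + math.pi) / (2 * math.pi) * pano_w) % pano_w   # angle -> column
    y = (1.0 - r / radius) * (pano_h - 1)                       # center -> bottom row, rim -> top row
    return x, y


def make_polar(pano: Image, out_size: int, background: int = 0) -> Image:
    """Build a square polar panorama by sampling the fan panorama (nearest neighbour)."""
    pano_h, pano_w = len(pano), len(pano[0])
    out = [[background] * out_size for _ in range(out_size)]
    for v in range(out_size):
        for u in range(out_size):
            p = polar_to_pano(u, v, out_size, pano_w, pano_h)
            if p is not None:
                x, y = p
                out[v][u] = pano[int(round(y))][int(x) % pano_w]
    return out
```

Swapping the row mapping to `y = r / radius * (pano_h - 1)` gives the inverted "tunnel" variant with the sky in the middle.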

This is actually not an easy task. As you can see from the video, the quadcopter has to tilt its body to fight the wind, and since I don’t have a gimbal, the camera view is not level. In addition, the rotation axis lies somewhere outside the quadcopter itself, which makes this much more difficult than spinning a level camera on a tripod around its own Z axis. Therefore, feature matching is needed to recover the camera pose, and I can then use that information to unwrap the images and blend them together. Anyway, I will do stereo matching and reconstruction later, so any experiment on UAV multi-view geometry and camera calibration at this point is helpful.
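When the camera mostly rotates and the scene is far away compared with how far the camera moves, two frames are approximately related by a homography H = K R K^-1, where K is the intrinsic matrix and R the rotation between frames. Recovering the relative pose from matched features then reduces to estimating H. Below is a minimal normalized DLT sketch in NumPy, assuming the feature matches are already given and outlier-free; a real pipeline would wrap it in RANSAC, for example via OpenCV’s `cv2.findHomography(src, dst, cv2.RANSAC, thresh)`. This is a standard textbook technique offered for illustration, not the code behind the panoramas above, and because the real rotation axis is offset from the camera the homography is only an approximation.

```python
import numpy as np


def _normalize(pts: np.ndarray):
    """Hartley normalization: move centroid to origin, mean distance sqrt(2)."""
    c = pts.mean(axis=0)
    s = np.sqrt(2) / np.linalg.norm(pts - c, axis=1).mean()
    T = np.array([[s, 0, -s * c[0]], [0, s, -s * c[1]], [0, 0, 1]])
    ph = np.column_stack([pts, np.ones(len(pts))])
    return (T @ ph.T).T, T


def estimate_homography(src: np.ndarray, dst: np.ndarray) -> np.ndarray:
    """Normalized DLT: find H with dst ~ H @ src from >= 4 matches (N x 2 arrays)."""
    src_n, T1 = _normalize(src)
    dst_n, T2 = _normalize(dst)
    rows = []
    for (x, y, _), (u, v, _) in zip(src_n, dst_n):
        # Each match gives two linear equations in the 9 entries of H.
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows))
    H_n = vt[-1].reshape(3, 3)         # right singular vector of the smallest singular value
    H = np.linalg.inv(T2) @ H_n @ T1    # undo the normalization
    return H / H[2, 2]


def apply_homography(H: np.ndarray, pts: np.ndarray) -> np.ndarray:
    """Map N x 2 pixel coordinates through H, with perspective division."""
    ph = np.column_stack([pts, np.ones(len(pts))])
    q = (H @ ph.T).T
    return q[:, :2] / q[:, 2:3]


def rotation_homography(K: np.ndarray, R: np.ndarray) -> np.ndarray:
    """Homography induced by a pure camera rotation R: H = K R K^-1 (scaled so H[2,2] = 1)."""
    H = K @ R @ np.linalg.inv(K)
    return H / H[2, 2]
```

With K known, a rotation estimate follows from K^-1 H K (up to scale), and the frames can then be warped into a common cylindrical or spherical frame and blended.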

[Updated 8/4/13]

Initial results of my SfM and dense mesh reconstruction algorithm (all input images are from the YouTube video above):

Center City Philadelphia:

UPenn Franklin Field area:

As you can see, the algorithm only works well on nearby buildings. The disparity of pixels on far-away objects is too small for the algorithm to calculate depth. Another problem is that I’m only spinning the UAV in place, so the feature points basically just slide across the image instead of being seen from new viewpoints, which makes it very hard to recover their 3D geometry. Later I’ll fly the UAV around a specific target with a higher-resolution camera and see what happens.
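Why far-away objects fail can be made concrete with the stereo relation Z = f·B/d. Differentiating gives ΔZ ≈ Z²/(f·B)·Δd, so a fixed disparity error of about one pixel produces a depth error that grows with the square of the distance, while a larger baseline B (more camera translation between views) shrinks it. A minimal sketch with hypothetical focal length and baseline values, not measurements from these flights:

```python
def disparity(z: float, f: float, baseline: float) -> float:
    """Expected disparity in pixels of a point at depth z: d = f * B / z."""
    return f * baseline / z


def depth_error(z: float, f: float, baseline: float, disp_err: float = 1.0) -> float:
    """First-order depth uncertainty: dZ ~ Z**2 / (f * B) * disp_err."""
    return z * z / (f * baseline) * disp_err


if __name__ == "__main__":
    f_px = 1000.0  # hypothetical focal length in pixels
    b_m = 1.0      # hypothetical baseline in metres
    for z in (10.0, 100.0, 1000.0):
        print(f"Z={z:6.0f} m  d={disparity(z, f_px, b_m):7.2f} px  "
              f"dZ={depth_error(z, f_px, b_m):8.2f} m")
```

With these numbers a point 1 km away has a disparity of only 1 px, so a one-pixel matching error is as large as the depth itself. A spin in place makes B close to zero, which is the degenerate limit of the same formula.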

[Updated 8/25/13]

A Pennsylvania-based company has shown interest in my software. I will improve the algorithm and test it on their UAV. Once more accurate 3D mesh results are generated, we can prepare materials to apply for a US patent.

[Updated 10/1/14]

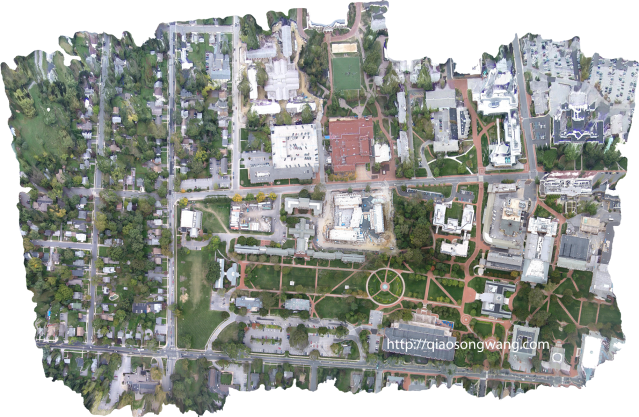

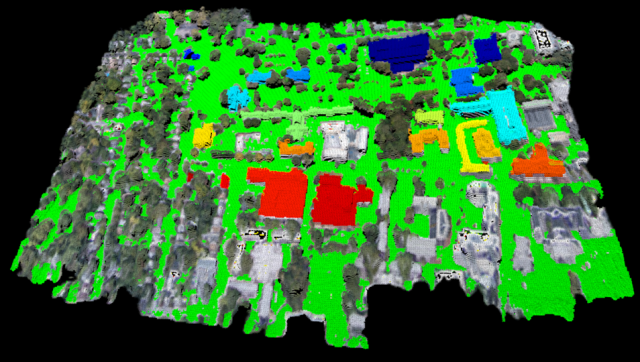

The parameters of the 3D reconstruction program are now almost perfectly tuned. Here are some input sequences and reconstruction results for the University of Delaware main campus:

Sample input sequence:

Top-down view:

You can view the 3D model of UD on Verold or Sketchfab.

[Updated 10/2/15]

New model with improved texture mapping:

Open source packages used:

Looks nice. I’d like to see the onboard footage.

I am looking into using the GoPro for the same application, and I was wondering whether you had success with SfM. My concern is that the lens has too much distortion for a valid solution. I would be interested in hearing about or seeing your results!

Hey Matthew,

You can always do single-camera calibration and rectify the video frames. It’s really simple, and OpenCV has all the source code ready:

http://docs.opencv.org/doc/tutorials/calib3d/camera_calibration/camera_calibration.html

I’ll probably post my code for single- and multi-camera calibration and rectification in the future.

Thank you,

Qiaosong
